Another type of out of memory errors in java is - "

java lang outofmemoryerror gc overhead limit exceeded". This is NOT talking about that your machine has no enough memory (heap memory) to run the application, but rather a symptom of a different issue.

According to

Oracle here is the description about on what situations this error is thrown.

"The parallel collector will throw an OutOfMemoryError if too much time is being spent in garbage collection: if more than 98% of the total time is spent in garbage collection and less than 2% of the heap is recovered, an OutOfMemoryError will be thrown. This feature is designed to prevent applications from running for an extended period of time while making little or no progress because the heap is too small. If necessary, this feature can be disabled by adding the option -XX:-UseGCOverheadLimit to the command line."

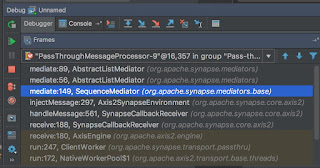

If you are hit with this issue for a java application (i.e a WSO2 Server), it can be a GC tuning issue, or not enough memory issue or else it can be a program level bug. In order to decide, we need to set up some monitoring for the application.

What causes gc overhead limit exceeded issue?

As Oracle states, this issue surface when automatic GC runs too frequently to free up memory in your Java application. After a garbage collection, if the Java process is spending more than approximately 98% of its time doing garbage collection and if it is recovering less than 2% of the heap and has been doing so far the last 5 (compile time constant) consecutive garbage collections, java throws this error to prevent your application from soaking up CPU time without getting anything done so that you have a chance of diagnosing the problem.

What programming issues can cause this?

If Java application creates a tons of temporary objects in memory and loose all the references to them frequently and tons of weakly-referenced objects in an already very memory-constrained environment this issue will surface. The program might need to re-written with a different algorithm reusing created objects as much as possible.

But wait, this can be normal, if memory is not very constrained...!! If there is no obvious programming issue like above, we need to setup monitoring and decide.

Below let us discuss how to monitor GC. For obvious reasons, we need to monitor Heap memory behavior as well.

How to monitor WSO2 Sever application for GC

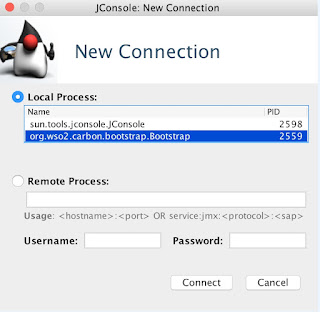

Jconsole

1. Run wso2 server by [HOME]/bin wso2server.sh script.

2. Find process id by using command ps -ef | grep wso2

3. Run jconsole by typing jconsole in commandline

4. Attach process and monitor. You can attach jconsole to a remotely running sever as well. (Refer to server startup log)

JMX Service URL : service:jmx:rmi://localhost:11111/jndi/rmi://localhost:9999/jmxrmi

Here we can see how much time server has taken for GC. You can calculate, out of total up time, GC time should be less than 2% for a healthy running application. The saw tooth memory variation pattern is normal, as Java will do a automatic GC once PS Eden Space is filled.

Above grep was for WSO2 Message Broker 3.1.0 with 2GB Heap allocated. Now I created a memory constrained environment by reducing heap memory size to 500MB. Still application ran, but with more frequent GC happening to gain memory. Here, memory could re-gain by GC process (more than 2%), so there is no OOM error.

- Likewise, if application retained objects permanently in memory over long run, and always keep to occupy a memory near to heap limit, GC happens frequently.

- As objects keep memory (due to some memory leak, or a cache not cleared for a longer time by application), the amount of memory GC can recover keeps getting low in every GC cycle.

- There, if load (usually a high load) put to the server creates temporary objects in memory so fast that GC operations cannot sweep them out, GC overhead limit exceeded error will be thrown.

(Java) VisualVM + Visual GC

Java Visual VM is a GUI profiling/monitoring tool provided by Oracle JDK. Instead of the version that is included with JDK, you can download Visual VM directly from its website as well.

Same as Jconsole above you can monitor attaching to the process or monitor remotely (File >> add JMX connection).

You can get the basic information about GC and Heap from Monitoring tab. But it does not give you detailed information.

To get detailed information install the Visual GC plug-in (use tools menu).

By using Visual GC, you can see the information provided by running jstatd in a more intuitive way.

Using jstat

Jstat is a monitoring application for JVM hotspot. We can collect GC information of a JVM using Jstat.

- Run wso2 server by [HOME]/bin wso2server.sh script.

- Use "jps" command to find "virtual machine ID"

- Use that virtual machine ID with jstat command as below

jstat –gc

running "jstat –gc 1000" (or 1s) will display the GC monitoring data on the console every 1 second.

running "jstat –gc 1000 10" will display the GC monitoring information once every 1 second for 10 times in total.

There are many options other than -gc, among which GC related ones are listed below.

| Option Name | Description |

| gc | It shows the current size for each heap area and its current usage (Ede, survivor, old, etc.), total number of GC performed, and the accumulated time for GC operations. |

| gccapactiy | It shows the minimum size (ms) and maximum size (mx) of each heap area, current size, and the number of GC performed for each area. (Does not show current usage and accumulated time for GC operations.) |

| gccause | It shows the "information provided by -gcutil" + reason for the last GC and the reason for the current GC. |

| gcnew | Shows the GC performance data for the new area. |

| gcnewcapacity | Shows statistics for the size of new area. |

| gcold | Shows the GC performance data for the old area. |

| gcoldcapacity | Shows statistics for the size of old area. |

| gcpermcapacity | Shows statistics for the permanent area. |

| gcutil | Shows the usage for each heap area in percentage. Also shows the total number of GC performed and the accumulated time for GC operations. |

From above options -gcutil and -gccapacity are most helpful.

- -gcutil is used to check the usage of heap areas, the number of GC performed, and the total accumulated time for GC operations.

- while -gccapacity option and others can be used to check the actual size allocated.

For details about the stats denoted by each column please refer to post

here.

-verbosegc

Unlike Jstat, this is a JVM option that you can run with. Meaning, it should be passed as a parameter to JVM at the startup. However, as -verbosegc displays easy to understand output results whenever a GC occurs, it is very helpful for monitoring rough GC information.

The followings are other options that can be used with -verbosegc.

- -XX:+PrintGCDetails (enabled by default)

- -XX:+PrintGCTimeStamps

- -XX:+PrintHeapAtGC

- -XX:+PrintGCDateStamps (from JDK 6 update 4)

Here is the format that information is printed when a minor GC occurs.

[GC [<collector>: <starting occupancy1> -> <ending occupancy1>, <pause time1> secs] <starting occupancy3> -> <ending occupancy3>, <pause time3> secs]

| Collector | Name of Collector Used for minor gc |

| starting occupancy1 | The size of young area before GC |

| ending occupancy1 | The size of young area after GC |

| pause time1 | The time when the Java application stopped running for minor GC |

| starting occupancy3 | The total size of heap area before GC |

| ending occupancy3 | The total size of heap area after GC |

| pause time3 | The time when the Java application stopped running for overall heap GC, including major GC |

Below is for a full GC

[Full GC [Tenured: 3485K->4095K(4096K), 0.1745373 secs] 61244K->7418K(63104K), [Perm : 10756K->10756K(12288K)], 0.1762129 secs] [Times: user=0.19 sys=0.00, real=0.19 secs]

As -verbosegc option outputs a log every time a GC event occurs, it is easy to see the changes of the heap usage rates caused by GC operation.

To enable this in a wso2server edit [SERVER_HOME]/bin wso2server.sh file as below. Note that you can direct output of gc logs to a file specifying -Xloggc option.

$JAVACMD \

-Xbootclasspath/a:"$CARBON_XBOOTCLASSPATH" \

-Xms500m -Xmx1024m -XX:MaxPermSize=256m \

-XX:+PrintGCDetails \

-XX:+PrintGCTimeStamps \

-XX:+PrintHeapAtGC \

-XX:+PrintGCDateStamps \

-Xloggc:/temp/aaa/wso2mb-3.1.0/bin/gclog.log \

-XX:+HeapDumpOnOutOfMemoryError \

Following is a sample output of above exercise.

{Heap before GC invocations=12 (full 0):

PSYoungGen total 272384K, used 238314K [0x00000007eaa80000, 0x0000000800000000, 0x0000000800000000)

eden space 200704K, 100% used [0x00000007eaa80000,0x00000007f6e80000,0x00000007f6e80000)

from space 71680K, 52% used [0x00000007f6e80000,0x00000007f933a948,0x00000007fb480000)

to space 69632K, 0% used [0x00000007fbc00000,0x00000007fbc00000,0x0000000800000000)

ParOldGen total 341504K, used 128961K [0x00000007c0000000, 0x00000007d4d80000, 0x00000007eaa80000)

object space 341504K, 37% used [0x00000007c0000000,0x00000007c7df05b0,0x00000007d4d80000)

PSPermGen total 36352K, used 36237K [0x00000007b0000000, 0x00000007b2380000, 0x00000007c0000000)

object space 36352K, 99% used [0x00000007b0000000,0x00000007b2363598,0x00000007b2380000)

2016-11-20T14:30:29.565-0530: 11.921: [GC [PSYoungGen: 238314K->39257K(270848K)] 367275K->170403K(612352K), 0.0190040 secs] [Times: user=0.06 sys=0.00, real=0.02 secs]

Heap after GC invocations=12 (full 0):

PSYoungGen total 270848K, used 39257K [0x00000007eaa80000, 0x00000007ff900000, 0x0000000800000000)

eden space 208384K, 0% used [0x00000007eaa80000,0x00000007eaa80000,0x00000007f7600000)

from space 62464K, 62% used [0x00000007fbc00000,0x00000007fe256760,0x00000007ff900000)

to space 67072K, 0% used [0x00000007f7600000,0x00000007f7600000,0x00000007fb780000)

ParOldGen total 341504K, used 131145K [0x00000007c0000000, 0x00000007d4d80000, 0x00000007eaa80000)

object space 341504K, 38% used [0x00000007c0000000,0x00000007c80125b0,0x00000007d4d80000)

PSPermGen total 36352K, used 36237K [0x00000007b0000000, 0x00000007b2380000, 0x00000007c0000000)

object space 36352K, 99% used [0x00000007b0000000,0x00000007b2363598,0x00000007b2380000)

}

{Heap before GC invocations=13 (full 0):

PSYoungGen total 270848K, used 247641K [0x00000007eaa80000, 0x00000007ff900000, 0x0000000800000000)

eden space 208384K, 100% used [0x00000007eaa80000,0x00000007f7600000,0x00000007f7600000)

from space 62464K, 62% used [0x00000007fbc00000,0x00000007fe256760,0x00000007ff900000)

to space 67072K, 0% used [0x00000007f7600000,0x00000007f7600000,0x00000007fb780000)

ParOldGen total 341504K, used 131145K [0x00000007c0000000, 0x00000007d4d80000, 0x00000007eaa80000)

object space 341504K, 38% used [0x00000007c0000000,0x00000007c80125b0,0x00000007d4d80000)

PSPermGen total 37376K, used 37048K [0x00000007b0000000, 0x00000007b2480000, 0x00000007c0000000)

object space 37376K, 99% used [0x00000007b0000000,0x00000007b242e080,0x00000007b2480000)

2016-11-20T14:30:30.252-0530: 12.607: [GC [PSYoungGen: 247641K->14407K(275456K)] 378787K->145734K(616960K), 0.0191150 secs] [Times: user=0.03 sys=0.00, real=0.02 secs]

Heap after GC invocations=13 (full 0):

PSYoungGen total 275456K, used 14407K [0x00000007eaa80000, 0x0000000800000000, 0x0000000800000000)

eden space 208384K, 0% used [0x00000007eaa80000,0x00000007eaa80000,0x00000007f7600000)

from space 67072K, 21% used [0x00000007f7600000,0x00000007f8411f40,0x00000007fb780000)

to space 69120K, 0% used [0x00000007fbc80000,0x00000007fbc80000,0x0000000800000000)

ParOldGen total 341504K, used 131327K [0x00000007c0000000, 0x00000007d4d80000, 0x00000007eaa80000)

object space 341504K, 38% used [0x00000007c0000000,0x00000007c803fc50,0x00000007d4d80000)

PSPermGen total 37376K, used 37048K [0x00000007b0000000, 0x00000007b2480000, 0x00000007c0000000)

object space 37376K, 99% used [0x00000007b0000000,0x00000007b242e080,0x00000007b2480000)

}

{Heap before GC invocations=14 (full 0):

PSYoungGen total 275456K, used 222791K [0x00000007eaa80000, 0x0000000800000000, 0x0000000800000000)

eden space 208384K, 100% used [0x00000007eaa80000,0x00000007f7600000,0x00000007f7600000)

from space 67072K, 21% used [0x00000007f7600000,0x00000007f8411f40,0x00000007fb780000)

to space 69120K, 0% used [0x00000007fbc80000,0x00000007fbc80000,0x0000000800000000)

ParOldGen total 341504K, used 131327K [0x00000007c0000000, 0x00000007d4d80000, 0x00000007eaa80000)

object space 341504K, 38% used [0x00000007c0000000,0x00000007c803fc50,0x00000007d4d80000)

PSPermGen total 39936K, used 39471K [0x00000007b0000000, 0x00000007b2700000, 0x00000007c0000000)

object space 39936K, 98% used [0x00000007b0000000,0x00000007b268bd60,0x00000007b2700000)

2016-11-20T14:30:30.924-0530: 13.280: [GC [PSYoungGen: 222791K->3696K(274432K)] 354118K->135555K(615936K), 0.0038710 secs] [Times: user=0.02 sys=0.00, real=0.00 secs]

Heap after GC invocations=14 (full 0):

PSYoungGen total 274432K, used 3696K [0x00000007eaa80000, 0x0000000800000000, 0x0000000800000000)

eden space 205312K, 0% used [0x00000007eaa80000,0x00000007eaa80000,0x00000007f7300000)

from space 69120K, 5% used [0x00000007fbc80000,0x00000007fc01c390,0x0000000800000000)

to space 72192K, 0% used [0x00000007f7300000,0x00000007f7300000,0x00000007fb980000)

ParOldGen total 341504K, used 131859K [0x00000007c0000000, 0x00000007d4d80000, 0x00000007eaa80000)

object space 341504K, 38% used [0x00000007c0000000,0x00000007c80c4c18,0x00000007d4d80000)

PSPermGen total 39936K, used 39471K [0x00000007b0000000, 0x00000007b2700000, 0x00000007c0000000)

object space 39936K, 98% used [0x00000007b0000000,0x00000007b268bd60,0x00000007b2700000)

}

There is a Software called

HPJMeter which can be used to analyze -verbosegc output results graphically.

Solutions to the problem

- If above monitoring tools tells you that there is not enough memory to handle the load put to the server, there is an actual resource limitation. In that case you need to increase heap memory size allocated to JVM. The load might be creating too many temporary objects. And in long run some objects are kept in memory by application for some time, making the situation worse. Following is how we can update memory allocation using JVM parameters. Xms - for minimum limit. Xmx - for maximum limit

- If monitoring tells you that there is a memory leak keeping objects in memory in long run, preventing GC to recover memory (even running frequently when memory brim is reaching), need to fix the memory leak on the first place.

- If load is considerably low and there is enough memory allocated, need to revisit the code how temporary objects are created and used by the application. Choosing to reuse objects instead of creating new ones can reduce the number of times the garbage collector gets in the way.

- GC tuning might be an option as well. Frequent GC from beginning might solve this particular problem given that there is no memory leak (-XX:-UseConcMarkSweepGC will be an option).